Revision as of 07:40, 1 September 2023

Projects

NSF CSA Project

The HPGeoC applications have been selected as an early science project for the NSF Leadership Class Computing Facility (LCCF), funded through NSF's Characteristic Science Applications (CSA) program. The project supports the development of state-of-the-art earthquake modeling capabilities toward exascale by porting and co-designing software for future LCCF systems to achieve machine-specific performance efficiency. Horizon, to be deployed at the end of 2025, is expected to deliver a ten-fold or greater improvement in time-to-solution over Frontera, the current NSF leadership system.

Intel oneAPI Center of Excellence

The Intel oneAPI CoE for Earthquake Simulation is an interdisciplinary research center whose goal is to modernize the highly scalable 3D earthquake modeling environment. The modernization will feature cross-architecture programming to create portable, high-performance code for advanced high-performance computing systems. This project will continue our successful collaboration with Intel to update this state-of-the-art seismic wave propagation code to support multi-vendor, multi-architecture systems for heterogeneous computing.

NSF CSSI Project

Optimizing inter-node communication is key to achieving good performance in scientific applications, but it is a daunting task at the scale these applications run. The HPGeoC team is collaborating with OSU, UTK, and the University of Oregon to co-design the MVAPICH2 and TAU libraries together with applications, improving time-to-solution on next-generation HPC architectures through intelligent performance engineering that uses emerging communication primitives. This research is funded by the NSF Office of Advanced Cyberinfrastructure CSSI program.

CyberShake SGT Calculation

AWP-ODC is a highly scalable, parallel finite-difference application developed at SDSC and SDSU to simulate the dynamic rupture and wave propagation that occur during an earthquake. We have developed strain Green tensor (SGT) creation and seismogram synthesis capabilities. The GPU-based SGT calculations were recently benchmarked at 32.6 Pflop/s sustained performance on OLCF Frontier. This improved computational efficiency in CyberShake waveform modeling will accelerate the creation of a California statewide physics-based seismic hazard map.
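The reason SGTs make CyberShake-style seismogram synthesis cheap can be sketched as follows. By reciprocity, one simulation per site component yields the strain Green tensor H_nij at every candidate source point, and a point-source seismogram then reduces to contracting H with the moment tensor M and convolving with a source time function s(t): u_n(t) = sum_ij M_ij (H_nij * s)(t). The plain-Python sketch below assumes the SGT time series are already in memory as nested lists; the function names and data layout are hypothetical and are not CyberShake's file formats or code.

```python
# Sketch: point-source seismogram synthesis from a strain Green tensor (SGT).
# Hypothetical data layout (not CyberShake's): sgt[n][i][j] is a list of
# samples of H_nij(t) at one source point for site component n, moment is a
# symmetric 3x3 moment tensor, and stf is a sampled source time function.
# All series share the same time step dt.


def convolve(a, b, dt):
    """Discrete approximation of the continuous convolution (a * b)(t)."""
    out = [0.0] * (len(a) + len(b) - 1)
    for i, ai in enumerate(a):
        for j, bj in enumerate(b):
            out[i + j] += ai * bj * dt
    return out


def synthesize(sgt, moment, stf, dt):
    """Return one seismogram u_n(t) = sum_ij M_ij (H_nij * s)(t) per component n."""
    seismograms = []
    for h_n in sgt:
        nt = len(h_n[0][0])
        # Contract the SGT with the moment tensor at every time sample.
        contracted = [
            sum(moment[i][j] * h_n[i][j][k] for i in range(3) for j in range(3))
            for k in range(nt)
        ]
        # Convolve the contracted response with the source time function.
        seismograms.append(convolve(contracted, stf, dt))
    return seismograms


if __name__ == "__main__":
    # Toy SGT and a moment tensor with only M_xy = M_yx nonzero.
    h = [[[0.0, 0.0, 0.0] for _ in range(3)] for _ in range(3)]
    h[0][1] = [1.0, 0.0, 0.0]
    h[1][0] = [1.0, 0.0, 0.0]
    moment = [[0.0, 1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
    print(synthesize([h], moment, stf=[1.0, 1.0], dt=0.5))  # [[1.0, 1.0, 0.0, 0.0]]
```

A finite rupture would be handled by summing such point-source contributions over subfault points with appropriate time shifts; the nested loops here favor readability over speed.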

SCEC Data Visualization

This project focuses on visualizing a series of large earthquake simulations to gain scientific insight into the impact of Southern San Andreas Fault earthquake scenarios on Southern California. In addition to creating its own software, the group uses tools installed and maintained on SDSC computational resources.

San Andreas Fault Zone Plasticity

Producing realistic seismograms at high frequencies will require several improvements in anelastic wave propagation engines, including the implementation of nonlinear material behavior. This project supports the development of nonlinear material behavior in both the CPU- and GPU-based wave propagation solvers. Simulations of the ShakeOut earthquake scenario have shown that nonlinearity could reduce earlier predictions of long-period (0-0.5 Hz) ground motions in the Los Angeles basin by 30-70%.
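The page does not state which yield criterion the solvers use, so the following only illustrates what nonlinear material behavior can mean inside a wave propagation step. It assumes a Drucker-Prager criterion, a common pressure-dependent model for rock and soil: if the shear stress measure sqrt(J2) of a cell exceeds a yield stress that grows with confining pressure, the deviatoric stress is scaled back onto the yield surface. Names, units, and the instantaneous (non-viscoplastic) return are assumptions of this sketch.

```python
# Sketch: instantaneous Drucker-Prager stress return for one grid cell.
# Assumptions (not taken from the HPGeoC solvers): stress is a 3x3 nested
# list with compression negative, cohesion and phi (friction angle, radians)
# set the yield stress, and any excess shear stress is removed in one step.
import math


def drucker_prager_return(sigma, cohesion, phi):
    """Scale the deviatoric stress back onto the yield surface if it exceeds it."""
    mean = (sigma[0][0] + sigma[1][1] + sigma[2][2]) / 3.0
    # Deviatoric stress s_ij = sigma_ij - mean * delta_ij.
    dev = [[sigma[i][j] - (mean if i == j else 0.0) for j in range(3)] for i in range(3)]
    # Shear stress measure tau = sqrt(J2) = sqrt(0.5 * s_ij * s_ij).
    tau = math.sqrt(0.5 * sum(dev[i][j] ** 2 for i in range(3) for j in range(3)))
    # Yield stress rises with confining pressure (mean < 0 in compression).
    yield_stress = max(0.0, cohesion * math.cos(phi) - mean * math.sin(phi))
    if tau <= yield_stress:
        return [row[:] for row in sigma]  # elastic state: unchanged
    r = yield_stress / tau  # r < 1 pulls tau back to the yield stress
    return [[(mean if i == j else 0.0) + r * dev[i][j] for j in range(3)] for i in range(3)]
```

Because the yield stress depends on mean stress, confinement matters: a cell with mean stress -10 and sqrt(J2) = 5 yields when cohesion is 1 and phi = 0, but stays elastic with the same cohesion and phi = 30 degrees.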

Simulating Earthquake Faults (FESD)

HPGeoC researchers continue to assist researchers from several universities and the US Geological Survey (USGS) in developing detailed, large-scale computer simulations of earthquake faults. The initial focus is on the North American plate boundary and the San Andreas system of Northern and Southern California.

Completed Projects

SCEC M8 Simulation

At the time, M8 was the largest earthquake simulation ever conducted. It was a collaborative effort led by SCEC, involving more than 30 seismologists and computational scientists and supported by a DOE INCITE allocation award, and it presented tremendous computational and I/O challenges. The simulation was conducted on NCCS Jaguar, and the work was an ACM Gordon Bell finalist at Supercomputing'10.

GPU Acceleration Project

HPGeoC has developed a hybrid CUDA/MPI version of the AWP-ODC code that achieved 2.3 Pflop/s sustained performance and enabled a 0-10 Hz rough-fault simulation on ORNL Titan. The code is also used to study the effects of nonlinearity on surface waves during large earthquakes on the San Andreas Fault.
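The MPI half of a hybrid CUDA/MPI stencil code rests on domain decomposition with ghost-cell (halo) exchange. The sketch below simulates it serially in Python for a 1D, 3-point stencil: each list in parts stands in for one rank and its GPU, exchange_halos() replaces the MPI messages, and update() replaces the CUDA kernel. It is a hypothetical illustration of the pattern, not AWP-ODC's decomposition, which works on 3D grids.

```python
# Sketch: 1D domain decomposition with ghost-cell (halo) exchange, simulated
# serially. Each entry of `parts` plays the role of one MPI rank driving one
# GPU; in a real hybrid code exchange_halos() would be MPI messages and
# update() a CUDA kernel. All function names here are hypothetical.


def global_step(u, alpha):
    """Reference explicit 3-point update on the whole grid (end points fixed)."""
    inner = [u[i] + alpha * (u[i - 1] - 2.0 * u[i] + u[i + 1]) for i in range(1, len(u) - 1)]
    return [u[0]] + inner + [u[-1]]


def split(u, nparts):
    """Give each part a contiguous chunk plus one ghost cell on each side."""
    if len(u) % nparts:
        raise ValueError("grid size must be divisible by nparts")
    chunk = len(u) // nparts
    return [[0.0] + u[k * chunk:(k + 1) * chunk] + [0.0] for k in range(nparts)]


def exchange_halos(parts):
    """Copy each neighbor's edge value into the adjacent ghost cell."""
    for left, right in zip(parts, parts[1:]):
        left[-1] = right[1]  # right neighbor's first owned point
        right[0] = left[-2]  # left neighbor's last owned point


def update(local, alpha, first, last):
    """Apply the stencil to owned points; the global end points stay fixed."""
    new = local[:]
    for i in range(1, len(local) - 1):
        if (first and i == 1) or (last and i == len(local) - 2):
            continue
        new[i] = local[i] + alpha * (local[i - 1] - 2.0 * local[i] + local[i + 1])
    return new


def decomposed_step(parts, alpha):
    """One time step: refresh halos, then update every part independently."""
    exchange_halos(parts)
    n = len(parts)
    return [update(p, alpha, k == 0, k == n - 1) for k, p in enumerate(parts)]


def gather(parts):
    """Concatenate owned points (dropping ghosts) back into one global array."""
    return [x for p in parts for x in p[1:-1]]
```

Running several steps with global_step() on the undecomposed grid and decomposed_step() on four parts produces identical values after gather(), which is the property any real halo exchange must preserve.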

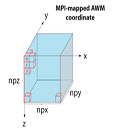

Topology-aware Communication and Scheduling (HECURA-2)

Topology-aware MPI communication, mapping, and scheduling is a new research area that exploits knowledge of the network topology to optimize communication, either point-to-point or collective. We are participating in a joint project among OSU, TACC, and SDSC as a case study in how to implement new topology-aware MPI software at the application level.
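One way to see what topology-aware mapping buys is to count network hops per communicating pair. The sketch below places a hypothetical 4x4 process grid with nearest-neighbor halo exchange onto a 4x4 2D torus of nodes, once with process (i, j) on node (i, j) and once with an arbitrary strided permutation standing in for a topology-unaware placement. The setup is invented for illustration; production systems use 3D tori or other networks, and real placements come from the scheduler and MPI's own topology interfaces.

```python
# Sketch: score two rank-to-node mappings by the average network hops per
# halo-exchange pair. Hypothetical setup: a 4x4 process grid with
# nearest-neighbor communication placed on a 4x4 2D torus of nodes.


def torus_hops(a, b, dims):
    """Minimal hop count between node coordinates a and b on a torus."""
    return sum(min(abs(x - y), d - abs(x - y)) for x, y, d in zip(a, b, dims))


def stencil_pairs(px, py):
    """Rank pairs that exchange halos in a non-periodic px x py process grid."""
    pairs = []
    for i in range(px):
        for j in range(py):
            r = i * py + j
            if j + 1 < py:
                pairs.append((r, r + 1))  # neighbor in the same row
            if i + 1 < px:
                pairs.append((r, r + py))  # neighbor in the next row
    return pairs


def average_hops(mapping, pairs, dims):
    """mapping[rank] is a node coordinate tuple; returns mean hops per pair."""
    return sum(torus_hops(mapping[a], mapping[b], dims) for a, b in pairs) / len(pairs)


DIMS = (4, 4)
PX, PY = 4, 4
NODES = [(n // DIMS[1], n % DIMS[1]) for n in range(DIMS[0] * DIMS[1])]
PAIRS = stencil_pairs(PX, PY)

# Topology-aware placement: process (i, j) runs on torus node (i, j).
aware = [NODES[r] for r in range(PX * PY)]
# Topology-unaware stand-in: a fixed strided permutation of the nodes.
naive = [NODES[(3 * r) % len(NODES)] for r in range(PX * PY)]

if __name__ == "__main__":
    print("aware:", average_hops(aware, PAIRS, DIMS))
    print("naive:", average_hops(naive, PAIRS, DIMS))
```

The aware mapping averages exactly one hop per pair, while the strided mapping averages more, so some of its halo messages cross extra links and compete for bandwidth.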

Petascale Inference in Earthquake System Science (PetaShake-2)

This is a SCEC project carried out by the cross-disciplinary, multi-institutional CME collaboration. We are providing a platform-independent petascale earthquake application that can harness petascale computing to tackle PetaShake problems through a graduated series of milestone calculations.

Petascale Cyberfacility for Physics-based Seismic Hazard Analysis (PetaSHA-3)

The SCEC PetaSHA-3 project is sponsored by NSF to provide society with better predictions of earthquake hazards. This project will provide the high-performance computing required to achieve the objectives for earthquake source physics and ground motion prediction outlined in the SCEC3 (2007-2012) research plan.

Blue Waters PAID

Supported by the NSF Petascale Application Improvement Discovery (PAID) program through NCSA, this project will tune the SCEC community code AWP-ODC for performance efficiency on the Kepler GPUs of Blue Waters. Our research will investigate how earthquake ruptures produce high-frequency ground motions, which are known to have an important impact on seismic hazard. Existing HPC systems cannot achieve the physical scale range needed to explore the sources of high frequencies.

Fault Tolerance Project

The current checkpoint/restart approach may impose an unacceptable amount of overhead on some file systems. In collaboration with CSM, we have developed a fault-tolerance framework in which the surviving application processes adapt themselves to failures. In collaboration with ORNL, we are integrating ADIOS to scale checkpointing up on the Lustre file system.
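To make the checkpoint-overhead argument concrete, Young's classic first-order approximation estimates the checkpoint interval that minimizes lost time as tau = sqrt(2 C M), where C is the time to write one checkpoint and M is the mean time between failures. The sketch below assumes independent failures and ignores restart cost; it is a textbook estimate, not the CSM/ORNL framework described above.

```python
# Sketch: Young's first-order checkpoint interval and the resulting waste.
# Assumptions: failures are independent with mean time between failures
# `mtbf`, writing one checkpoint costs `ckpt_cost` seconds, restart is free.
import math


def young_interval(ckpt_cost, mtbf):
    """Interval tau ~= sqrt(2 * C * M) that minimizes expected lost time."""
    return math.sqrt(2.0 * ckpt_cost * mtbf)


def waste_fraction(interval, ckpt_cost, mtbf):
    """Approximate fraction of time lost: checkpoint writes plus rework after a failure."""
    return ckpt_cost / interval + interval / (2.0 * mtbf)


if __name__ == "__main__":
    C, M = 300.0, 86400.0  # 5-minute checkpoint, one failure per day
    tau = young_interval(C, M)
    print(f"interval = {tau:.0f} s, waste = {waste_fraction(tau, C, M):.1%}")  # interval = 7200 s, waste = 8.3%
```

At the optimum the waste equals sqrt(2C/M), so cutting checkpoint write time by a factor of two (for example through faster parallel I/O) lowers the overhead by a factor of about 1.41.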

Supercomputing On Demand: SDSC Supports Event-Driven Science

HPGeoC supports on-demand computing for Caltech users running urgent-science earthquake applications. The National Science Foundation (NSF) ACCESS supercomputing resource Trestles was allocated to open up this new computing paradigm. We have developed novel ways of using this type of allocation, as well as new scheduling and job-handling procedures.

Finished Projects

- PetaShake-1: An advanced computational platform designed to support high-resolution simulations of large earthquakes on initial NSF petascale machines, supported by an NSF OCI and GEO grant.

- HECURA-1: In collaboration with OSU and TACC, we developed non-blocking one-sided and two-sided communication and computation/communication overlap to improve the parallel efficiency of SCEC seismic applications.

- PetaSHA-1/2: Cross-disciplinary, multi-institutional collaborations coordinated by SCEC, each a 2-year EAR/IF project of the same name, to develop a cyberfacility with a common simulation framework for executing SHA computational pathways.

- TeraShake: The TeraShake simulations model the rupture of a 230-kilometer stretch of the San Andreas Fault and the resulting magnitude 7.7 earthquake. TeraShake was a multi-institution collaboration led by SCEC/CME.

- ShakeOut: The Great California ShakeOut is a statewide earthquake drill. It is held each October and helps people prepare for what to do before, during, and after an earthquake.

- Parallelization of Regional Spectral Method (RSM)
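The computation/communication overlap mentioned in the HECURA-1 entry above typically follows a simple pattern: post the halo receive, update the interior points that need only local data while the message is in flight, then wait and finish the edge points. In the sketch below a single worker thread stands in for a non-blocking MPI request; fetch_halos() is a hypothetical placeholder and the stencil is a generic 1D example, not SCEC application code.

```python
# Sketch: overlap halo communication with interior computation.
# A single worker thread stands in for a non-blocking MPI request (such as
# MPI_Irecv followed later by MPI_Wait); fetch_halos() is a hypothetical
# placeholder for the network transfer, not part of any MPI library.
import time
from concurrent.futures import ThreadPoolExecutor


def stencil(left, center, right, alpha):
    """Explicit 3-point update used for every owned point."""
    return center + alpha * (left - 2.0 * center + right)


def fetch_halos(left_value, right_value, latency=0.01):
    """Pretend to receive the two neighbor values after some network latency."""
    time.sleep(latency)
    return left_value, right_value


def overlapped_step(local, left_neighbor, right_neighbor, alpha):
    """Update owned points: interior while halos are in flight, edges afterwards."""
    if len(local) < 2:
        raise ValueError("need at least two owned points")
    new = [0.0] * len(local)
    with ThreadPoolExecutor(max_workers=1) as pool:
        request = pool.submit(fetch_halos, left_neighbor, right_neighbor)  # "post" the receive
        # Interior points depend only on locally owned data.
        for i in range(1, len(local) - 1):
            new[i] = stencil(local[i - 1], local[i], local[i + 1], alpha)
        ghost_left, ghost_right = request.result()  # "wait" for the halos
    # Edge points need the received ghost values.
    new[0] = stencil(ghost_left, local[0], local[1], alpha)
    new[-1] = stencil(local[-2], local[-1], ghost_right, alpha)
    return new
```

The result matches a blocking update exactly; the gain comes only from hiding transfer latency behind the interior loop, which pays off when the interior is large relative to the edges.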

High-Performance Computing Allocations

- NSF ACCESS Allocation

- DOE INCITE Allocation

- NSF LRAC Allocation

- TACC CSA Allocation

- NERSC Allocation

- SDSC Expanse DD